REDIS-CLUSTER: Analysis of the Slot Migration Process

Copyright notice: this is an original article from this site, published by 萌叔.

When reposting, please credit 萌叔 | https://vearne.cc

1. Introduction

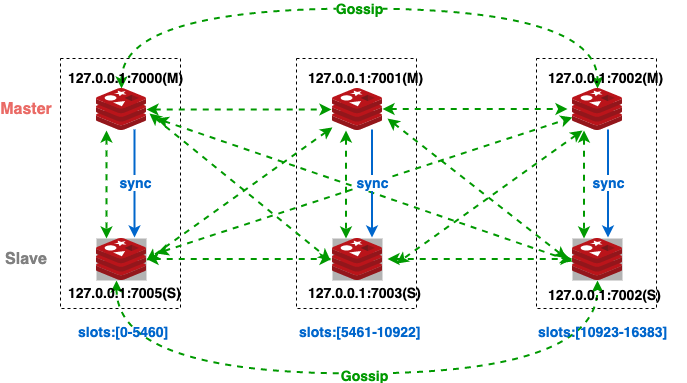

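In a previous article, 《REDIS-CLUSTER集群创建内部细节详解》 (a detailed look at the internals of creating a Redis Cluster), I created a Redis cluster.

In this article, I add two nodes to that cluster and walk through how a slot is migrated.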

2. Cluster Expansion

Add the master node 127.0.0.1:7006:

redis-cli --cluster add-node 127.0.0.1:7006 127.0.0.1:7000

Add the replica node 127.0.0.1:7007 (and specify its master):

redis-cli --cluster add-node 127.0.0.1:7007 127.0.0.1:7000 --cluster-slave --cluster-master-id 86f3cb72813a2d07711b56b3143ff727911f4e1e

The newly added nodes hold no slots yet, so a reshard command is needed to redistribute the slots:

root@BJ-02:~/cluster-test/7007# redis-cli --cluster reshard 127.0.0.1:7000

>>> Performing Cluster Check (using node 127.0.0.1:7000)

S: 7eb7ceb4d886580c6d122e7fd92e436594cc105e 127.0.0.1:7000

slots: (0 slots) slave

replicates be905740b96469fc6f20339fc9898e153c06d497

M: 86f3cb72813a2d07711b56b3143ff727911f4e1e 127.0.0.1:7006

slots: (0 slots) master

1 additional replica(s)

M: be905740b96469fc6f20339fc9898e153c06d497 127.0.0.1:7005

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 9e29dd4b2a7318e0e29a48ae4b37a7bd5ea0a828 127.0.0.1:7007

slots: (0 slots) slave

replicates 86f3cb72813a2d07711b56b3143ff727911f4e1e

S: 603a8a403536f625f53467881f5f78def9bd46e5 127.0.0.1:7003

slots: (0 slots) slave

replicates 784fa4b720213b0e2b51a4542469f5e318e8658b

M: 4e0e4be1b4afd2cd1d10166a6788449dd812a4c0 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 585c7df69fb267941a40611bbd8ed90349b49175 127.0.0.1:7004

slots: (0 slots) slave

replicates 4e0e4be1b4afd2cd1d10166a6788449dd812a4c0

M: 784fa4b720213b0e2b51a4542469f5e318e8658b 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 0

How many slots do you want to move (from 1 to 16384)? 1

What is the receiving node ID? 86f3cb72813a2d07711b56b3143ff727911f4e1e

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all

Ready to move 1 slots.

Source nodes:

M: be905740b96469fc6f20339fc9898e153c06d497 127.0.0.1:7005

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 4e0e4be1b4afd2cd1d10166a6788449dd812a4c0 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 784fa4b720213b0e2b51a4542469f5e318e8658b 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

Destination node:

M: 86f3cb72813a2d07711b56b3143ff727911f4e1e 127.0.0.1:7006

slots: (0 slots) master

1 additional replica(s)

Resharding plan:

Moving slot 5461 from 784fa4b720213b0e2b51a4542469f5e318e8658b

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Moving slot 5461 from 127.0.0.1:7001 to 127.0.0.1:7006:
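The plan above took its single slot from 127.0.0.1:7001, the master that owns the most slots. The following is a minimal sketch of how such a plan can be computed. It assumes that redis-cli sorts the source nodes by slot count in descending order, gives each source a share proportional to its slot count, and rounds up only for the first source. The function name `compute_reshard_table` and the dict layout are hypothetical and only serve the illustration.

```python
import math


def compute_reshard_table(sources, numslots):
    """Return a list of (source address, slot) pairs describing what to move.

    sources maps a node address to the collection of slots it owns.
    """
    total = sum(len(slots) for slots in sources.values())
    # Largest owner first; sorted() is stable, so ties keep insertion order.
    ordered = sorted(sources.items(), key=lambda item: len(item[1]), reverse=True)
    table = []
    for index, (addr, slots) in enumerate(ordered):
        share = numslots / total * len(slots)
        # Assumption: only the first (largest) source rounds its share up.
        take = math.ceil(share) if index == 0 else math.floor(share)
        for slot in sorted(slots)[:take]:
            table.append((addr, slot))
    return table


# Slot ownership copied from the cluster check output above.
sources = {
    "127.0.0.1:7005": range(0, 5461),
    "127.0.0.1:7002": range(10923, 16384),
    "127.0.0.1:7001": range(5461, 10923),
}
print(compute_reshard_table(sources, 1))  # [('127.0.0.1:7001', 5461)]
```

With one slot requested, only the largest source rounds its share (about 0.33) up to 1, which is consistent with slot 5461 being chosen in the plan.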

3. Slot Migration Process

The migration logic lives in clusterManagerMoveSlot():

static int clusterManagerMoveSlot(clusterManagerNode *source,

clusterManagerNode *target,

int slot, int opts, char**err)

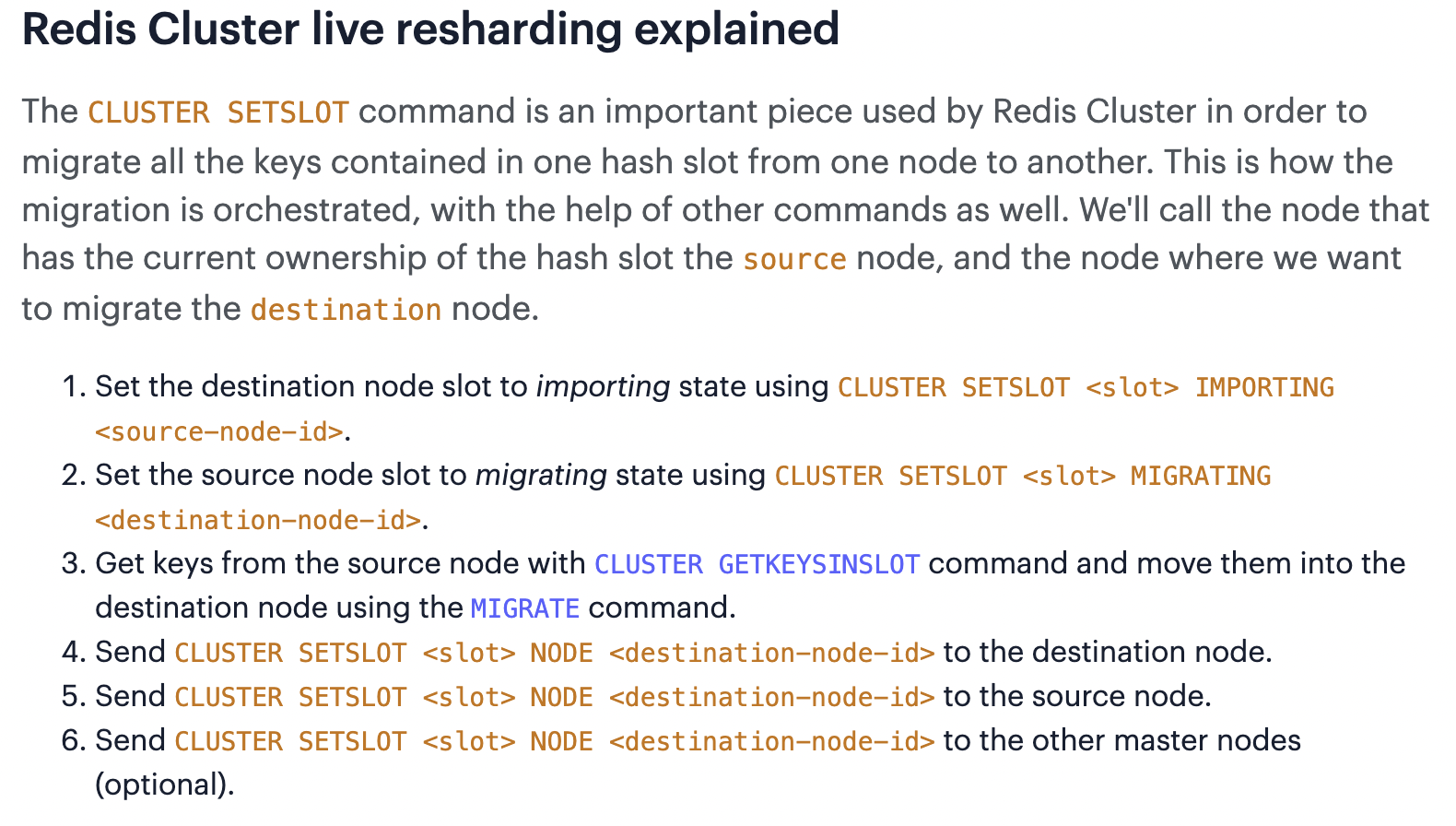

The overall procedure largely matches the official documentation (see reference 2).

3.1 Setting the State

3.1.1 client -> destination-node

Set the slot's state on destination-node to IMPORTING.

This tells it that it is about to import the slot from source-node.

CLUSTER SETSLOT <slot> IMPORTING <source-node-id>

3.1.2 client -> source-node

Set the slot's state on source-node to MIGRATING.

This tells it that it is about to export the slot to destination-node.

CLUSTER SETSLOT <slot> MIGRATING <destination-node-id>

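These two steps are important:

1) source-node knows for certain that when it receives a request for a key it does not hold, it may need to reply with an ASK redirect.

2) destination-node knows for certain how to handle a request that follows ASKING.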

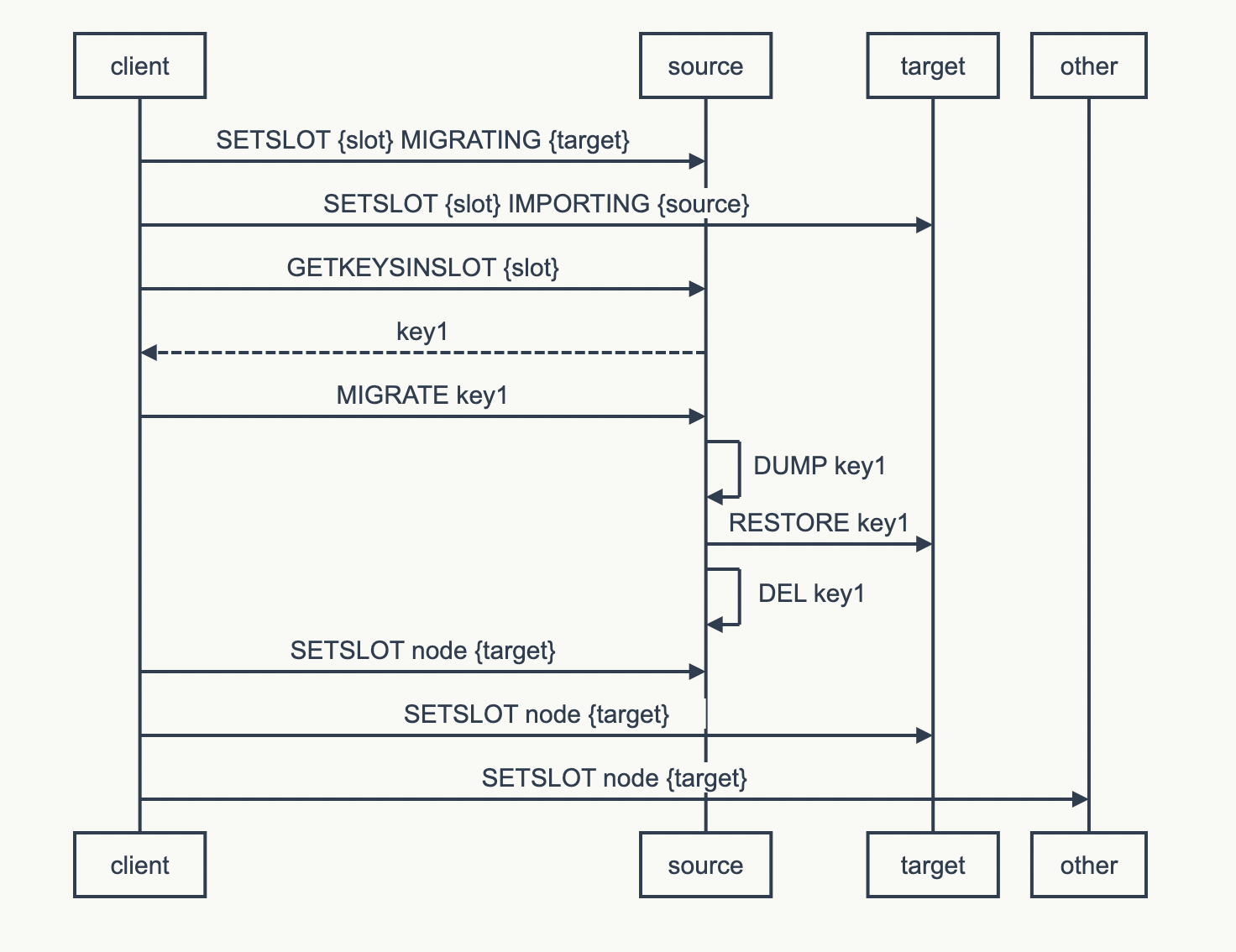

3.2 Migrating the Slot

Move every key in the given slot from source-node to destination-node.

Function involved:

clusterManagerMigrateKeysInSlot()

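The client has to take part throughout the slot migration. If the client crashes during this period, the outcome is bad: source-node stays in the MIGRATING state and destination-node stays in the IMPORTING state.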

step1: client -> source-node

CLUSTER GETKEYSINSLOT <slot> <count>

redis-cli passes its pipeline size as <count>; the default pipeline size is 10.

Example:

> CLUSTER GETKEYSINSLOT 7000 3

1) "key_39015"

2) "key_89793"

3) "key_92937"

The client fetches from source-node the list of keys that live in the given slot.

step2: client -> source-node

MIGRATE <destination-node-ip> <destination-node-port> "" <destination-db> <timeout> KEYS key1 key2 key3

The command is atomic and blocks the two instances for the time required to transfer the key, at any given time the key will appear to exist in a given instance or in the other instance, unless a timeout error occurs.

Note: this command is atomic and blocks both source-node and destination-node.

The command internally uses DUMP to generate the serialized version of the key value, and RESTORE in order to synthesize the key in the target instance. The source instance acts as a client for the target instance. If the target instance returns OK to the RESTORE command, the source instance deletes the key using DEL.

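While executing MIGRATE, source-node in turn relies on three commands:

- DUMP: serializes the key.
- RESTORE-ASKING: transfers the serialized key to destination-node, where it is deserialized.
- DEL: source-node deletes the key.

Repeat step1 and step2 until every key in the given slot has been moved to destination-node.

Note: if a key is very large, the DUMP and RESTORE commands may be slow.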
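The step1/step2 loop can be sketched as a small in-memory simulation. This is not how redis-cli is implemented: plain dicts stand in for the two nodes, and the helper names (`get_keys_in_slot`, `migrate`, `migrate_keys_in_slot`, `toy_slot_of`) are hypothetical. It only illustrates why the loop ends once the source holds no more keys in the slot.

```python
def get_keys_in_slot(node, slot, count, slot_of):
    """Model of CLUSTER GETKEYSINSLOT: at most `count` keys that live in `slot`."""
    keys = [key for key in node if slot_of(key) == slot]
    return keys[:count]


def migrate(source, destination, keys):
    """Model of MIGRATE ... KEYS: copy each key, then delete it at the source."""
    for key in keys:
        destination[key] = source[key]  # stands in for DUMP + RESTORE-ASKING
        del source[key]                 # stands in for DEL after the target replied OK


def migrate_keys_in_slot(source, destination, slot, slot_of, pipeline=10):
    """Repeat step1 and step2 until the slot is empty; return the batch count."""
    batches = 0
    while True:
        keys = get_keys_in_slot(source, slot, pipeline, slot_of)
        if not keys:
            return batches
        migrate(source, destination, keys)
        batches += 1


def toy_slot_of(key):
    """Toy mapping (assumption): keys named key_* pretend to live in slot 7000."""
    return 7000 if key.startswith("key_") else 1


source = {f"key_{i}": i for i in range(25)}
source["other"] = "stays"
destination = {}
batches = migrate_keys_in_slot(source, destination, 7000, toy_slot_of)
print(batches, len(destination), sorted(source))  # 3 25 ['other']
```

With 25 keys and a pipeline size of 10, the loop runs three batches (10, 10, 5) and leaves keys from other slots untouched.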

3.3 Broadcasting the State

The client sends the following to all nodes:

CLUSTER SETSLOT <slot> node <destination-node-id>

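This tells each node that the slot has been migrated to destination-node and that it should update the binding between the slot and its node.

At the same time:

* source-node clears its MIGRATING state

* destination-node clears its IMPORTING state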
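The bookkeeping behind the three SETSLOT subcommands can be modeled as follows. This is a simplified sketch, not Redis's actual implementation: the `Node` class, its attribute names, and the node IDs `src`, `dst` and `other` are hypothetical, and validation that a real node performs is left out.

```python
class Node:
    """Toy model of the per-node state touched by CLUSTER SETSLOT."""

    def __init__(self, node_id, slot_owner):
        self.node_id = node_id
        self.slot_owner = dict(slot_owner)  # this node's view: slot -> owner id
        self.migrating_to = {}              # slot -> destination node id
        self.importing_from = {}            # slot -> source node id

    def setslot(self, slot, subcommand, node_id):
        sub = subcommand.upper()
        if sub == "IMPORTING":
            self.importing_from[slot] = node_id
        elif sub == "MIGRATING":
            self.migrating_to[slot] = node_id
        elif sub == "NODE":
            self.slot_owner[slot] = node_id
            if node_id != self.node_id:
                # The slot now belongs to someone else: stop migrating it.
                self.migrating_to.pop(slot, None)
            else:
                # The slot now belongs to this node: the import is finished.
                self.importing_from.pop(slot, None)
        else:
            raise ValueError(f"unsupported subcommand: {subcommand}")


view = {5461: "src"}
src, dst, other = Node("src", view), Node("dst", view), Node("other", view)

dst.setslot(5461, "IMPORTING", "src")   # 3.1.1
src.setslot(5461, "MIGRATING", "dst")   # 3.1.2
for node in (src, dst, other):          # 3.3, after all keys were moved
    node.setslot(5461, "node", "dst")

print(src.migrating_to, dst.importing_from, other.slot_owner)  # {} {} {5461: 'dst'}
```

After the broadcast, every node agrees that `dst` owns the slot and neither side keeps a MIGRATING or IMPORTING entry.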

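The whole process is fairly complex, so a sequence diagram is provided to help readers follow it.

Note: the client takes part in the entire slot migration. If the client crashes, the states of source-node and destination-node remain unchanged, so the slot stays open on both nodes.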

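4. Questions

There are two questions I was curious about.

Q: During slot migration, what happens when a key needs to be written or read? If new keys keep being written, will the migration never finish?

A:

"somekey" belongs to Slot[11058].

Slot[11058] is currently being migrated: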

127.0.0.1:7002 --> Slot[11058] --> 127.0.0.1:7006

| Role | Address |
|---|---|
| source-node | 127.0.0.1:7002 |
| destination-node | 127.0.0.1:7006 |
| unrelated node | 127.0.0.1:7000 |
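For reference, the slot of a key is CRC16(key) mod 16384, where CRC16 is the XMODEM variant, and only the content of the first non-empty `{...}` hash tag is hashed when one is present. The sketch below uses a plain bitwise CRC instead of the lookup table a real implementation would use; the function names `crc16_xmodem` and `key_slot` are hypothetical.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021, initial value 0, no bit reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Map a key to one of the 16384 hash slots, honoring hash tags."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty tag between the first '{' and the next '}' counts.
        if end != -1 and end > start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


print(key_slot("somekey"))
print(key_slot("user:{42}:name") == key_slot("user:{42}:age"))  # True
```

Running `CLUSTER KEYSLOT somekey` against any cluster node is the authoritative way to check the value quoted above.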

Case 1

Requests sent to an unrelated node:

Both reads and writes are simply redirected to source-node with MOVED.

127.0.0.1:7000> get somekey

(error) MOVED 11058 127.0.0.1:7002

127.0.0.1:7000> set somekey xxx

(error) MOVED 11058 127.0.0.1:7002

Case 2

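Requests sent to source-node fall into two cases:

If the key currently exists on source-node, the request is processed and answered normally.

Otherwise the request is redirected to destination-node with an ASK error.

Because requests for keys that do not exist on source-node are redirected, new keys are created on destination-node. The set of keys left on source-node can therefore only shrink, and the migration does finish.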

127.0.0.1:7002> get somekey

(error) ASK 11058 127.0.0.1:7006

127.0.0.1:7002> set somekey abc

(error) ASK 11058 127.0.0.1:7006

Case 3

Destination-node only serves a request for this slot when it is immediately preceded by ASKING; every other request is still redirected to source-node with MOVED.

127.0.0.1:7006> get somekey

(error) MOVED 11058 127.0.0.1:7002

127.0.0.1:7006> set somekey "abc"

(error) MOVED 11058 127.0.0.1:7002

127.0.0.1:7006> ASKING

OK

127.0.0.1:7006> set somekey "abc"

OK

127.0.0.1:7006> get somekey

(error) MOVED 11058 127.0.0.1:7002

127.0.0.1:7006> ASKING

OK

127.0.0.1:7006> get somekey

"abc"
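The three cases can be condensed into one decision function. This is a simplified model under stated assumptions: the `Node` dataclass and the `handle` function are hypothetical, node addresses are used in place of node IDs for brevity, and the one-shot ASKING flag is passed as an argument instead of being stored per connection.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    addr: str
    slot_owner: dict                                      # slot -> owner address
    keys: set = field(default_factory=set)                # keys stored locally
    migrating_to: dict = field(default_factory=dict)      # slot -> destination
    importing_from: dict = field(default_factory=dict)    # slot -> source


def handle(node, slot, key, asking=False):
    """Return 'OK' or the redirect a node would answer for a single-key command."""
    owner = node.slot_owner[slot]
    if owner == node.addr:
        # Case 2: the owner serves keys it still holds and redirects the rest.
        if slot in node.migrating_to and key not in node.keys:
            return f"ASK {slot} {node.migrating_to[slot]}"
        return "OK"
    # Case 3: an importing node serves the slot only right after ASKING.
    if slot in node.importing_from and asking:
        return "OK"
    # Case 1 and the rest of case 3: point the client at the current owner.
    return f"MOVED {slot} {owner}"


owner = {11058: "127.0.0.1:7002"}
unrelated = Node("127.0.0.1:7000", dict(owner))
source = Node("127.0.0.1:7002", dict(owner), migrating_to={11058: "127.0.0.1:7006"})
destination = Node("127.0.0.1:7006", dict(owner), importing_from={11058: "127.0.0.1:7002"})

print(handle(unrelated, 11058, "somekey"))                 # MOVED 11058 127.0.0.1:7002
print(handle(source, 11058, "somekey"))                    # ASK 11058 127.0.0.1:7006
print(handle(destination, 11058, "somekey"))               # MOVED 11058 127.0.0.1:7002
print(handle(destination, 11058, "somekey", asking=True))  # OK
```

Passing `asking` per call mirrors the session above, where the flag set by ASKING covers only the next command.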

Q: While MIGRATE is executing, how is atomicity guaranteed, and how are source-node and destination-node both blocked?

A:

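My understanding is as follows. Redis processes data commands on a single thread, so for source-node the MIGRATE command is atomic. The RESTORE-ASKING command is likewise executed atomically on destination-node.

Blocking works in a similar way:

1) On source-node, MIGRATE is atomic, so it necessarily blocks other commands while it runs.

2) On destination-node, RESTORE-ASKING is atomic, so it also blocks other commands while it runs.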

5. References

1. Redis 5.0 redis-cli --cluster help output

2. CLUSTER SETSLOT

3. CLUSTER GETKEYSINSLOT